i will propose a new mathematical algorithm based on rsa encoding but with matrixes

the parameters for a written text we should to send are number of rows in wich is divided the matrix one number is equivalent to one complete stand alone word, the number of columns of the matrix

the system verifies that A (source nonencripted word matrix) * (B (encripted matrix) ) = (E+H) (private key diagonal not prime numbers matrix with high values the higher they are the difficlut to unencrypt) I in which C=D+E,F=G+H witch are two matrixes that are also diagonal and are composed by primes and satisfies conjecture of goldback , D=private key , E = public key,F,G private keys H public key same correspondence and satisfaction than C=D+E

A=B^-1=A^t/|A|=>Aij*Aij^t/|A|=

so now we can obtained both encoding for encrypted and not encrypted text as well as public/private keys once defined C and F

so above ec. is trivially solved with down article

ANOTHER ORTHOGONAL SIMPLISTIC APPROACH TO COMPRESSING DATA SENDING JUST THE EIGENVALUES OF THE EIGENVECTORS OF AN EUCLIDEAN SPACE NxN MATRIX.

Just by thinking a little bit i found a linnear example that resulst in a quickest calculation way.the idea is very simplistic and based in the diagonal expression of a N equations of N terms in an appropiate Base space.

thus we have the packet of data composed bya matrix

A=(a00 a10 a20 a30...an0)

(a01 a11 a21 a31..an1)

(..................................)

(a0n an1 an2 an3..ann) the biggest the matrix higher compression its reached the rate of compression is by (N-1)*N kb per N kb

the matrix A is in base (e0,e1,..,en)in another euclidean base B can be expressed as BAB^-1=(lambdai) diagonal matrix

=BAB^t/B=(lambda1,lambda2,lambda3,..,lambdan)*I where I is the identity matrix and we also have the fact that |A-lambda*I|=0 and

B^-1*(lambda1,lambda2,lambda3,..,lambdan)*I*B=B^t*B/B*(lambda)=B^t*(lambda)=A

|(enn 0 ..0..0.....0|lambda1 0 0 0)|

|(0 e(n-1)(n-1) 0| 0 lambda2 0 0)|=0

|(... ... ... ... | 0 0 ............... 0)|

|(0.. 0. ... 0 ... e0 | 0 0 0 lambdan)|

wich is the compressing/decompressing varmonde determinant

so for any linear combination of rows and columns with lambdai=A

we obtain a linear combination of the base (e1,e2,..,en) base B can be rewritten from base lambda thus e1+e2=d1,e3+e6-e2=d2...e5+e2-e1=dn for example.

so for data sent into a matrix m>n x n in wich the new subpacket (row or column) is a linnear combination of others can be simplified and begun compresed indicating the linear combination of the basesubi and the eigenvalue lambda

happy compressing!!

thus for any linnear combination of |A-lambda*I|=0

|(enn 0 ..0..0.....0|lambda1 0 0 0)|

|(0 e(n-1)(n-1) 0| 0 lambda2 0 0)|=0

|(... ... ... ... | 0 0 ............... 0)|

|(0.. 0. ... 0 ... e0 | 0 0 0 lambdan)|

|( 1 1 0 1 |= (enn +e(n-1)+e0)*1 -> this byte can be precalc

|(a00 a01 a02...a0n|lambda1 0 0 0)|

|(0 a(11) a12...a1n| 0 lambda2 0 0)|=|A|

|(... ... ... ... ...| 0 0 ............... 0)|

|(an0 an1 an2... ann| 0 0 0 lambdan)|

|( 1 1 0 1 |= (enn +e(n-1)+e0)*1 -> this byte can be precalc

|(enn 0 ..0..0.....0|b00a00bnn/b00.0 0 0)|

|(0 e(n-1)(n-1) 0| 0 b11a00b(n-1)(n-1)/b11 0 0)|=I

|(... ... ... ... | 0 0 ..,,,,,,,,,,,,,,,. ............ 0)|

|(0.. 0. ... 0 ... e0 | 0 0 0 bnnannb00/bnn)|

|( 1 1 0 1 |= (enn +e(n-1)+e0)*1 -> this byte can be precalc

another interesting option is to calc in base the previous compresed rows in based a recurrence i.e enn=e(n-1)-e(n-2)/e1n etc

this have important implications if we decomposed lambda1*lamba2*lambda3*..*lambdan=2^a*3^b*5^c*..p(k-1)^z=(pk)-1

can be obtaining all linnear combinations by applying the rule of its determinant

Determinant

From Wikipedia, the free encyclopedia

This article is about determinants in mathematics. For determinants on epidemiology, see Risk factor.

In algebra, the determinant is a special number associated with any square matrix. The fundamental geometric meaning of a determinant is a scale factor or coefficient for measure when the matrix is regarded as a linear transformation. Thus a 2 × 2 matrix with determinant 2 when applied to a set of points with finite area will transform those points into a set with twice the area. Determinants are important both in calculus, where they enter the substitution rule for several variables, and in multilinear algebra.

When its scalars are taken from a field F, a matrix is invertible if and only if its determinant is nonzero; more generally, when the scalars are taken from a commutative ring R, the matrix is invertible if and only if its determinant is a unit of R. Determinants are not that well-behaved for noncommutative rings.

The determinant of a matrix A is denoted det(A), or without parentheses: det A. An alternative notation, used for compactness, especially in the case where the matrix entries are written out in full, is to denote the determinant of a matrix by surrounding the matrix entries by vertical bars instead of the usual brackets or parentheses. Thus

denotes the determinant of the matrix

denotes the determinant of the matrix

For a fixed nonnegative integer n, there is a unique determinant function for the n×n matrices over any commutative ring R. In particular, this unique function exists when R is the field of real or complex numbers.

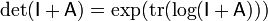

[edit]Interpretation as the area of a parallelogram

The 2×2 matrix

has determinant

If A is a 2x2 matrix, its determinant det A can be viewed as the oriented area of the parallelogram with vertices at (0,0), (a,b), (a + c, b + d), and (c,d). The oriented area is the same as the usual area, except that it is negative when the vertices are listed in clockwise order.

Further, the parallelogram itself can be viewed as the unit square transformed by the matrix A. The assumption here is that a linear transformation is applied to row vectors as the vector-matrix product xTAT, wherex is a column vector. The parallelogram in the figure is obtained by multiplying matrix A (which stores the co-ordinates of our parallelogram) with each of the row vectors  and

and  in turn. These row vectors define the vertices of the unit square. With the more common matrix-vector product Ax, the parallelogram has vertices at

in turn. These row vectors define the vertices of the unit square. With the more common matrix-vector product Ax, the parallelogram has vertices at  and

and  (note thatAx = (xTAT)T ).

(note thatAx = (xTAT)T ).

and

and  in turn. These row vectors define the vertices of the unit square. With the more common matrix-vector product Ax, the parallelogram has vertices at

in turn. These row vectors define the vertices of the unit square. With the more common matrix-vector product Ax, the parallelogram has vertices at  and

and  (note thatAx = (xTAT)T ).

(note thatAx = (xTAT)T ).

Thus when the determinant is equal to one, then the matrix represents an equi-areal mapping.

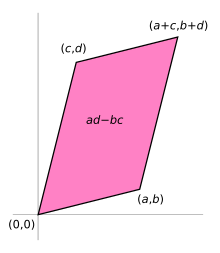

[edit]3-by-3 matrices

The determinant of a 3×3 matrix

is given by

The rule of Sarrus is a mnemonic for this formula: the sum of the products of three diagonal north-west to south-east lines of matrix elements, minus the sum of the products of three diagonal south-west to north-east lines of elements when the copies of the first two columns of the matrix are written beside it as below:

This mnemonic does not carry over into higher dimensions.

[edit]n-by-n matrices

The determinant of a matrix of arbitrary size can be defined by the Leibniz formula (as explained in the next paragraph) or the Laplace formula.

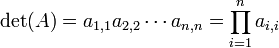

The Leibniz formula for the determinant of an n-by-n matrix A is

Here the sum is computed over all permutations σ of the set {1, 2, ..., n}. A permutation is a function that reorders this set of integers. The position of the element i after the reordering σ is denoted σi. For example, for n = 3, the original sequence 1, 2, 3 might be reordered to S = [2, 3, 1], with S1 = 2, S2 = 3, S3 = 1. The set of all such permutations (also known as the symmetric group on n elements) is denoted Sn. For each permutation σ, sgn(σ) denotes the signature of σ; it is +1 for even σ and −1 for odd σ. Evenness or oddness can be defined as follows: the permutation is even (odd) if the new sequence can be obtained by an even number (odd, respectively) of switches of numbers. For example, starting from [1, 2, 3] and switching the positions of 2 and 3 yields [1, 3, 2], switching once more yields [3, 1, 2], and finally, after a total of three (an odd number) switches, [3, 2, 1] results. Therefore [3, 2, 1] is an odd permutation. Similarly, the permutation [2, 3, 1] is even ([1, 2, 3] → [2, 1, 3] → [2, 3, 1], with an even number of switches). It is explained in the article on parity of a permutation why a permutation cannot be simultaneously even and odd.

In any of the n! summands, the term

is notation for the product of the entries at positions (i, σi), where i ranges from 1 to n:

For example, the determinant of a 3 by 3 matrix A (n = 3) is

This agrees with the rule of Sarrus given in the previous section.

The formal extension to arbitrary dimensions was made by Tullio Levi-Civita, see (Levi-Civita symbol) using a pseudo-tensor symbol. An alternative, but equivalent definition of the determinant can be obtained by using the following theorem:

- Let Mn(K) denote the set of all

matrices over the field K. There exists exactly one function

matrices over the field K. There exists exactly one function - with the two properties:

- F is alternating multilinear with regard to columns;

One can then define the determinant as the unique function with the above properties.

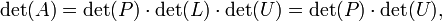

[edit]Determinant from LU decomposition

For matrices larger than 3x3 it is computationally cheaper (in number of operations conducted) to express, if possible, PA = LU, where P is a permutation matrix, L is a lower triangular matrix with diagonal elements equal to 1, and U is an upper triangular matrix. See LU decomposition for details on existence, uniqueness and algorithms for such a decomposition.

The determinant is then calculated as follows:

because det(L) = 1; the right hand side is easily computed as the product of all diagonal elements of U multiplied with the determinant of the permutation matrix P (which is +1 for an even permutation and is -1 for an odd permutation). This is more efficient than calculating the determinant of A because the determinant of a (upper or lower) triangular matrix is the product of its diagonal elements.

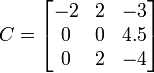

A small example:

Therefore

Of course, for a 2-by-2 matrix the determinant is easily computed directly, 6 × 3 − 3 × 4 in this example, but for large matrices the LU decomposition produces significant computational savings: it reduces the number of operations from O(n!) for the Leibniz formula to O(n3).

[edit]Applications

Determinants are used to characterize invertible matrices (i.e., a matrix is invertible if and only if it has a non-zero determinant), and to explicitly describe the solution to a system of linear equations with Cramer's rule:

For a matrix equation

the solution is

where Ai is the matrix formed by replacing the ith column of A by the column vector b.

Determinants are useful to state solution properties. There are, however, much better numerical algorithms available to compute an inverse matrix and/or the solution of a system of linear equations, based on LU, QR, or singular value decomposition. These algorithms solve a linear equation system in O(n3), whereas Cramer's rule is O(n4).

Determinants can be used to find the eigenvalues of the matrix A through the characteristic polynomial

where I is the identity matrix of the same dimension as A.

Again, this formula is useful to state the properties of eigenvalues, but not to numerically compute them if n > 2. It is much more efficient and reliable to compute eigenvalues, e.g., with the QR algorithm.

One often thinks of the determinant as assigning a number to every sequence of n vectors in  , by using the square matrix whose columns are the given vectors. With this understanding, the sign of the determinant of a basis can be used to define the notion of orientation in Euclidean spaces. The determinant of a set of vectors is positive if the vectors form a right-handed coordinate system, and negative if left-handed.

, by using the square matrix whose columns are the given vectors. With this understanding, the sign of the determinant of a basis can be used to define the notion of orientation in Euclidean spaces. The determinant of a set of vectors is positive if the vectors form a right-handed coordinate system, and negative if left-handed.

, by using the square matrix whose columns are the given vectors. With this understanding, the sign of the determinant of a basis can be used to define the notion of orientation in Euclidean spaces. The determinant of a set of vectors is positive if the vectors form a right-handed coordinate system, and negative if left-handed.

, by using the square matrix whose columns are the given vectors. With this understanding, the sign of the determinant of a basis can be used to define the notion of orientation in Euclidean spaces. The determinant of a set of vectors is positive if the vectors form a right-handed coordinate system, and negative if left-handed.

Determinants are used to calculate volumes in vector calculus: the absolute value of the determinant of real vectors is equal to the volume of the parallelepiped spanned by those vectors. As a consequence, if the linear map  is represented by the matrix A, and S is any measurable subset of

is represented by the matrix A, and S is any measurable subset of  , then the volume of f(S) is given by

, then the volume of f(S) is given by  . More generally, if the linear map

. More generally, if the linear map  is represented by the m-by-n matrix A, and S is any measurable subset of

is represented by the m-by-n matrix A, and S is any measurable subset of  , then the m-dimensional volume of f(S) is given by

, then the m-dimensional volume of f(S) is given by  . By calculating the volume of the tetrahedron bounded by four points, they can be used to identify skew lines.

. By calculating the volume of the tetrahedron bounded by four points, they can be used to identify skew lines.

is represented by the matrix A, and S is any measurable subset of

is represented by the matrix A, and S is any measurable subset of  , then the volume of f(S) is given by

, then the volume of f(S) is given by  . More generally, if the linear map

. More generally, if the linear map  is represented by the m-by-n matrix A, and S is any measurable subset of

is represented by the m-by-n matrix A, and S is any measurable subset of  , then the m-dimensional volume of f(S) is given by

, then the m-dimensional volume of f(S) is given by  . By calculating the volume of the tetrahedron bounded by four points, they can be used to identify skew lines.

. By calculating the volume of the tetrahedron bounded by four points, they can be used to identify skew lines.

The volume of any tetrahedron, given its vertices a, b, c, and d, is (1/6)·|det(a − b, b − c, c − d)|, or any other combination of pairs of vertices that would form a spanning tree over the vertices.

[edit]Properties characterizing the determinant

In addition to the Leibniz formula above, there is another way of calculating determinants of matrices. It is based on the following properties of determinants:

- If A is a triangular matrix, i.e. ai,j = 0 whenever i > j or, alternatively, whenever i < j, then

,

,

, for any permutation σ different from the identity permutation (the one not changing the order of the numbers 1, 2, ..., n)

, for any permutation σ different from the identity permutation (the one not changing the order of the numbers 1, 2, ..., n) - If B results from A by interchanging two rows or two columns, then det(B) = −det(A).

- If B results from A by multiplying one row or column with a number c, then det(B) = c · det(A).

- If B results from A by adding a multiple of one row to another row, or a multiple of one column to another column, then

These four properties can be used to compute determinants of any matrix, using Gaussian elimination. This is an algorithm that transforms any given matrix to a triangular matrix, only by using the operations in the last three items. Since the effect of these operations on the determinant can be traced, the determinant of the original matrix is known, once Gaussian elimination is performed.

It is also possible to expand a determinant along a row or column using Laplace's formula, which is efficient for relatively small matrices. To do this along row i, say, we write

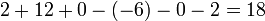

[edit]Example

The following compares various ways of calculating the determinant of the three-by-three matrix

Method

|

Calculation

| ||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

Rule of Sarrus

|

| ||||||||||||

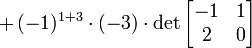

Laplace expansion, along the second column (for ease of calculation, it is best to choose a row or column with many zeros)

In this case we see that j=2 and i will change as we move down the column. The last value will be zero and is not necessary to calculate (due to a 0 coefficient).

|

| ||||||||||||

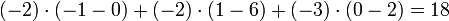

Or, by using the first row:

|

| ||||||||||||

Gauss algorithm

|   B is obtained from A by adding −1/2 × the first row to the second, so that det(A) = det(B) B is obtained from A by adding −1/2 × the first row to the second, so that det(A) = det(B) C is obtained from B by adding the first to the third row, so that det(C) = det(B) C is obtained from B by adding the first to the third row, so that det(C) = det(B) D is obtained from C by exchanging the second and third row, so that det(D) = −det(C) D is obtained from C by exchanging the second and third row, so that det(D) = −det(C)

The determinant of the (upper) triangular matrix D is the product of its entries on the main diagonal: (−2) · 2 · 4.5 = −18. Therefore det(A) = +18.

|

[edit]Further properties

[edit]Basic properties

In this section all matrices are assumed to be n-by-n matrices.

The determinant of the identity matrix

is one. This is even true if n = 0, even though I0 is an empty matrix.

The determinant is linear in each row of the matrix and also in each column of the matrix (in other words, when viewed as a function of the row of the matrix, the determinant is a multilinear map, and also when viewed as a function of the columns of the matrix). This means that if one fixes any row or column, and writes that row/column v of A as a linear combination of row/column vectors vk, then det(A) is equal to the same linear combination of the determinants of the matrices obtained from A by replacing v by vk while leaving all other entries unchanged. In particular, multiplying any individual row or column by r multiplies the determinant by r, implying that

The determinant is an alternating function of the rows of the matrix, and also of the columns of the matrix: whenever a matrix contains two identical rows or two identical columns, its determinant is 0. The rules given for the behaviour of the determinant under row and column operations follow from the multilinear and alternating properties. In fact the determinant can be characterized as the unique map from square matrices to scalars with the three properties listed so far.

The determinant of a rank-deficient matrix (one with rank less than n) is zero.

The determinant of a matrix product of square matrices equals the product of their determinants:

Thus the determinant is a multiplicative map. This formula is generalized by the Cauchy-Binet formula to (square) products of rectangular matrices.

A matrix over a commutative ring R is invertible if and only if its determinant is a unit in R. In particular, if A is a matrix over a field, such as the real or complex numbers, then A is invertible if and only if det(A) is not zero. In this case

An important implication of the previous properties is the following: if A and B are similar, i.e., if there exists an invertible matrix X such that  , then

, then

, then

, then

This means that the determinant is a similarity invariant. Because of this, the determinant of some linear transformation T : V → V for some finite dimensional vector space V is independent of the basis for V.

If A is triangular, then its determinant is the product of its diagonal entries.

A matrix and its transpose have the same determinant:

The determinant is a natural transformation: for any ring homomorphism ƒ:R→S and any square matrix A with entries in R, the determinant of the matrix obtained by applying ƒ to each of the entries of A equals ƒ(det(A)). This means in particular for matrices with polynomials as entries, that substitution of a value for the indeterminate in the determinant of the matrix gives the same result as first performing that substitution into all entries of the matrix and taking the determinant of the resulting matrix; it is thanks to this property that one sees that the roots of the characteristic polynomial of a matrix are precisely the eigenvalues of the matrix. Other applications of this property are for complex matrices: the determinant of its complex conjugate matrix (or of its conjugate transpose) is the complex conjugate of its determinant, and for integer matrices: the reduction modulo m of the determinant of such a matrix is equal to the determinant of the matrix reduced modulo m (the latter determinant being computed using modular arithmetic).

If A has real or complex entries, then det(A) is the product of the eigenvalues of A, counted with their algebraic multiplicities.

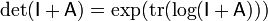

[edit]Sylvester's determinant theorem

Sylvester's determinant theorem states that for A, an m-by-n matrix, and B, an n-by-m matrix,

,

,

where  and

and  are the m-by-m and n-by-n identity matrices, respectively.

are the m-by-m and n-by-n identity matrices, respectively.

and

and  are the m-by-m and n-by-n identity matrices, respectively.

are the m-by-m and n-by-n identity matrices, respectively.

For the case of column vector c and row vector r, each with m components, the formula allows the quick calculation of the determinant of a matrix that differs from the identity matrix by a matrix of rank 1:

.

.

More generally, for any invertible m-by-m matrix X[1],

,

,

and

.

.

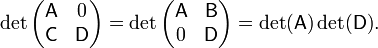

[edit]Block matrices

Suppose A, B, C, and D are n×n-, n×m-, m×n-, and m×m-matrices, respectively. Then

This can be seen from the Leibniz formula or by induction on n. When A is invertible, employing the following identity

leads to

When D is invertible, a similar identity with  factored out can be derived analogously[2], that is,

factored out can be derived analogously[2], that is,

factored out can be derived analogously[2], that is,

factored out can be derived analogously[2], that is,

Moreover, when the blocks are square matrices of the same order the following holds[3],

When C and D commute, that is CD=DC,  .

.

.

.

When A and C commute, that is AC=CA,  .

.

.

.

When B and D commute, that is BD=DB,  .

.

.

.

When A and B commute, that is AB=BA,  .

.

.

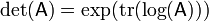

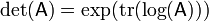

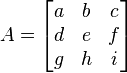

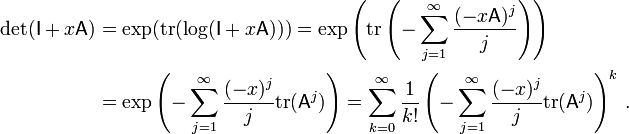

.[edit]Relationship to trace

As mentioned above, the determinant equals the product of the eigenvalues. Similarly, the trace equals the sum of the eigenvalues. Thus,

where  denotes the matrix exponential of

denotes the matrix exponential of  , because every eigenvalue λ of

, because every eigenvalue λ of  corresponds to the eigenvalue exp(λ) of

corresponds to the eigenvalue exp(λ) of  .

.

denotes the matrix exponential of

denotes the matrix exponential of  , because every eigenvalue λ of

, because every eigenvalue λ of  corresponds to the eigenvalue exp(λ) of

corresponds to the eigenvalue exp(λ) of  .

.

Under the substitution  ↦

↦  , the matrix logarithm of

, the matrix logarithm of  , the above equation yields

, the above equation yields  . Similarly,

. Similarly,  . The expression

. The expression  is closely related to the Fredholm determinant.

is closely related to the Fredholm determinant.

↦

↦  , the matrix logarithm of

, the matrix logarithm of  , the above equation yields

, the above equation yields  . Similarly,

. Similarly,  . The expression

. The expression  is closely related to the Fredholm determinant.

is closely related to the Fredholm determinant.[edit]Size-specific relationships to trace

For an  matrix

matrix  , these formulae can be used to derive:

, these formulae can be used to derive:

matrix

matrix  , these formulae can be used to derive:

, these formulae can be used to derive:- n = 1:

- n = 2:

- n = 3:

- n = 4:

These formulae are closely related to Newton's identities.

[edit]Proof Outline

is a polynomial in the variable x, has degree at most n, and the coefficient of xn is

is a polynomial in the variable x, has degree at most n, and the coefficient of xn is  . Working with

. Working with  small enough so that the power series for

small enough so that the power series for  converges absolutely, we compute

converges absolutely, we compute

Note that, despite appearances, each of these expressions simplifies to a polynomial of degree at most n. In particular, it is safe to ignore all xm terms, for m > n. The coefficients of x1, x2, x3, and x4 in this last expression give the above formulae.

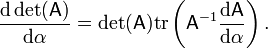

[edit]Derivative

By definition, e.g., using the Leibniz formula, the determinant of real (or analogously for complex) square matrices is a polynomial function from Rn×n to R. As such it is everywhere differentiable. Its derivative can be expressed using Jacobi's formula:

where adj(A) denotes the adjugate of A. In particular, if A is invertible, we have

Expressed in terms of the entries of A, these are

Yet another equivalent formulation is

,

,

using big O notation. The special case where A = I, the identity matrix, yields

This identity is used in describing the tangent space of certain matrix Lie groups.

If the matrix A is written as  where a, b, c are vectors, then the gradient over one of the three vectors may be written as the cross product of the other two:

where a, b, c are vectors, then the gradient over one of the three vectors may be written as the cross product of the other two:

where a, b, c are vectors, then the gradient over one of the three vectors may be written as the cross product of the other two:

where a, b, c are vectors, then the gradient over one of the three vectors may be written as the cross product of the other two:[edit]Natural transformation

A matrix over a commutative ring R is invertible if and only if its determinant is a unit in R. For a commutative ring R and a natural number n, det is a mapping between GLn(R) (the group of invertible n×n matrices with entries in R) and R× (the group of units in R), making detR (the specialization of det for a concrete commutative ring R) a morphism in the category of groups Grp. The category of commutative rings CRng and Grp are related by two functors GLn and ( )×, where GLn maps any given commutative ring R to the group GLn(R), and ( )× maps any given commutative ring R to the group R×. Every morphism f : R → S in CRng (with R and S being commutative rings) induces corresponding morphisms GLn f : GLn(R) → GLn(S) and f× : R× → S× in Grp, withdetS ° GLn f = f× ° detR. Therefore det is a natural transformation between GLn and ( )×.[4]

The above stated more briefly: Let R be a commutative ring. The map 'take-the-determinant' is a morphism of algebraic groups from GLn,R to Gm,R.

[edit]Abstract formulation

An n × n square matrix A may be thought of as the coordinate representation of a linear transformation of an n-dimensional vector space V. Given any linear transformation

we can define the determinant of A as the determinant of any matrix representing A. This is a well-defined notion (i.e. independent of a choice of basis) since the determinant is invariant under similarity transformations.

The determinant of a linear transformation A can be formulated in a coordinate-free manner, as follows. The set of alternating multilinear forms ΛnV is a vector space, on which A induces a linear transformation. Given φ ∈ ΛnV, define A(φ) ∈ ΛnV as

Because n is also the dimension of V, ΛnV is one-dimensional and this operation is just multiplication by some scalar that depends on A. This scalar is called the determinant of A. That is, det(A) is defined by the equation

To see that this definition agrees with the coordinate-dependent definition given above, one can use the characterization of the determinant given above: for example switching two columns changes the parity of the determinant; likewise, permuting the vectors in the exterior product v1 ∧ v2 ∧ ... ∧ vn to v2 ∧ v1 ∧ v3 ∧ ... ∧ vn, say, also alters the parity. Minors of a matrix can also be cast in this setting, by considering lower alternating forms ΛkV with k < dim V.

[edit]Algorithmic implementation

- The naive method of implementing an algorithm to compute the determinant is to use Laplace's formula for expansion by cofactors. This approach is extremely inefficient in general, however, as it is of order n! (n factorial) for an n×n matrix M.

- An improvement to order n3 can be achieved by using LU decomposition to write M = LU for triangular matrices L and U. Now, det M = det LU = det L det U, and since L and U are triangular the determinant of each is simply the product of its diagonal elements. Alternatively one can perform the Cholesky decomposition if possible or the QR decomposition and find the determinant in a similar fashion.

- If two matrices of order n can be multiplied in time M(n), where M(n)≥na for some a>2, then the determinant can be computed in time O(M(n)).[5] This means, for example, that an O(n2.376) algorithm exists based on the Coppersmith–Winograd algorithm.

- Since the definition of the determinant does not need divisions, a question arises: do fast algorithms exist that do not need divisions? This is especially interesting for matrices over rings. Indeed algorithms with run-time proportional to n4 exist. An algorithm of Mahajan and Vinay, and Berkowitz[6] is based on closed ordered walks (short clow). It computes more products than the determinant definition requires, but some of these products cancel and the sum of these products can be computed more efficiently. The final algorithm looks very much like an iterated product of triangular matrices.

- What is not often discussed is the so-called "bit complexity" of the problem, i.e. how many bits of accuracy you need to store for intermediate values. For example, using Gaussian elimination, you can reduce the matrix to upper triangular form, then multiply the main diagonal to get the determinant (this is essentially a special case of the LU decomposition as above), but the bit length of intermediate values can become exponentially long.[7] One could talk about when it is appropriate to round intermediate values, but an elegant way of calculating the determinant uses the Bareiss Algorithm, an exact-division method based on Sylvester's identity to give a run time of order n3 and bit complexity roughly the bit size of the original entries in the matrix times n.

[edit]History

Historically, determinants were considered without reference to matrices: originally, a determinant was defined as a property of a system of linear equations. The determinant "determines" whether the system has a unique solution (which occurs precisely if the determinant is non-zero). In this sense, determinants were first used in the Chinese mathematics textbook The Nine Chapters on the Mathematical Art (九章算術, Chinese scholars, around the 3rd century BC). In Europe, two-by-two determinants were considered by Cardano at the end of the 16th century and larger ones by Leibniz. [8][9][10][11]

It was Vandermonde (1771) who first recognized determinants as independent functions.[8] Laplace (1772) [12][13] gave the general method of expanding a determinant in terms of its complementary minors: Vandermonde had already given a special case. Immediately following, Lagrange (1773) treated determinants of the second and third order. Lagrange was the first to apply determinants to questions of elimination theory; he proved many special cases of general identities.

Gauss (1801) made the next advance. Like Lagrange, he made much use of determinants in the theory of numbers. He introduced the word determinants (Laplace had used resultant), though not in the present signification, but rather as applied to thediscriminant of a quantic. Gauss also arrived at the notion of reciprocal (inverse) determinants, and came very near the multiplication theorem.

The next contributor of importance is Binet (1811, 1812), who formally stated the theorem relating to the product of two matrices of m columns and n rows, which for the special case of m = n reduces to the multiplication theorem. On the same day (November 30, 1812) that Binet presented his paper to the Academy, Cauchy also presented one on the subject. (See Cauchy-Binet formula.) In this he used the word determinant in its present sense,[14][15] summarized and simplified what was then known on the subject, improved the notation, and gave the multiplication theorem with a proof more satisfactory than Binet's.[8][16] With him begins the theory in its generality.

The next important figure was Jacobi[9] (from 1827). He early used the functional determinant which Sylvester later called the Jacobian, and in his memoirs in Crelle for 1841 he specially treats this subject, as well as the class of alternating functions which Sylvester has called alternants. About the time of Jacobi's last memoirs, Sylvester (1839) and Cayley began their work.[17][18]

The study of special forms of determinants has been the natural result of the completion of the general theory. Axisymmetric determinants have been studied by Lebesgue, Hesse, and Sylvester; persymmetric determinants by Sylvester and Hankel;circulants by Catalan, Spottiswoode, Glaisher, and Scott; skew determinants and Pfaffians, in connection with the theory of orthogonal transformation, by Cayley; continuants by Sylvester; Wronskians (so called by Muir) by Christoffel and Frobenius; compound determinants by Sylvester, Reiss, and Picquet; Jacobians and Hessians by Sylvester; and symmetric gauche determinants by Trudi. Of the text-books on the subject Spottiswoode's was the first. In America, Hanus (1886), Weld (1893), and Muir/Metzler (1933) published treatises.

Axler in 1995 attacked determinant's place in Linear Algebra. He saw it as something to be derived from the core principles of Linear Algebra, not to be used to derive the core principles.[19]

[edit]See also

- Dieudonné determinant

- Matrix determinant lemma

- Permanent

- Pfaffian

- Minor (linear algebra)

- Trace (linear algebra)

- Slater determinant

- Pseudo-determinant

[edit]Notes

- ^ Proofs can be found in http://web.archive.org/web/20080113084601/http://www.ee.ic.ac.uk/hp/staff/www/matrix/proof003.html

- ^ These identities were taken http://www.ee.ic.ac.uk/hp/staff/dmb/matrix/proof003.html

- ^ Proofs are given at http://www.mth.kcl.ac.uk/~jrs/gazette/blocks.pdf

- ^ Mac Lane, Saunders (1998), Categories for the Working Mathematician, Graduate Texts in Mathematics 5 ((2nd ed.) ed.), Springer-Verlag, ISBN 0-387-98403-8

- ^ J.R. Bunch and J.E. Hopcroft, Triangular factorization and inversion by fast matrix multiplication, Mathematics of Computation, 28 (1974) 231–236.

- ^ http://page.inf.fu-berlin.de/~rote/Papers/pdf/Division-free+algorithms.pdf

- ^ Fang, Xin Gui; Havas, George (1997). "On the worst-case complexity of integer Gaussian elimination". Proceedings of the 1997 international symposium on Symbolic and algebraic computation. ISSAC '97. Kihei, Maui, Hawaii, United States: ACM. pp. 28-31.doi:http://doi.acm.org/10.1145/258726.258740. ISBN 0-89791-875-4.

- ^ a b c Campbell, H: "Linear Algebra With Applications", pages 111-112. Appleton Century Crofts, 1971

- ^ a b Eves, H: "An Introduction to the History of Mathematics", pages 405, 493–494, Saunders College Publishing, 1990.

- ^ A Brief History of Linear Algebra and Matrix Theory : http://darkwing.uoregon.edu/~vitulli/441.sp04/LinAlgHistory.html

- ^ Cajori, F. A History of Mathematics p. 80

- ^ Expansion of determinants in terms of minors: Laplace, Pierre-Simon (de) "Researches sur le calcul intégral et sur le systéme du monde," Histoire de l'Académie Royale des Sciences (Paris), seconde partie, pages 267-376 (1772).

- ^ Muir, Sir Thomas, The Theory of Determinants in the historical Order of Development [London, England: Macmillan and Co., Ltd., 1906].

- ^ The first use of the word "determinant" in the modern sense appeared in: Cauchy, Augustin-Louis “Memoire sur les fonctions qui ne peuvent obtenir que deux valeurs égales et des signes contraires par suite des transpositions operées entre les variables qu'elles renferment," which was first read at the Institute de France in Paris on November 30, 1812, and which was subsequently published in the Journal de l'Ecole Polytechnique, Cahier 17, Tome 10, pages 29-112 (1815).

- ^ Origins of mathematical terms: http://jeff560.tripod.com/d.html

- ^ History of matrices and determinants: http://www-history.mcs.st-and.ac.uk/history/HistTopics/Matrices_and_determinants.html

- ^ The first use of vertical lines to denote a determinant appeared in: Cayley, Arthur "On a theorem in the geometry of position," Cambridge Mathematical Journal, vol. 2, pages 267-271 (1841).

- ^ History of matrix notation: http://jeff560.tripod.com/matrices.html

- ^ Down with Determinants: http://www.axler.net/DwD.html

[edit]References

See also: Linear algebra#Further reading

- Axler, Sheldon Jay (1997), Linear Algebra Done Right (2nd ed.), Springer-Verlag, ISBN 0387982590

- de Boor, Carl (1990), "An empty exercise", ACM SIGNUM Newsletter 25 (2): 3–7, doi:10.1145/122272.122273.

- Lay, David C. (August 22, 2005), Linear Algebra and Its Applications (3rd ed.), Addison Wesley, ISBN 978-0321287137

- Meyer, Carl D. (February 15, 2001), Matrix Analysis and Applied Linear Algebra, Society for Industrial and Applied Mathematics (SIAM), ISBN 978-0898714548

- Poole, David (2006), Linear Algebra: A Modern Introduction (2nd ed.), Brooks/Cole, ISBN 0-534-99845-3

- Anton, Howard (2005), Elementary Linear Algebra (Applications Version) (9th ed.), Wiley International

- Leon, Steven J. (2006), Linear Algebra With Applications (7th ed.), Pearson Prentice Hall

[edit]External links

The Wikibook Linear Algebra has a page on the topic of

|

- Linear Systems Chapter from "Fundamental Problems of Algorithmic Algebra" Chee Yap's chapter on Linear Systems describing implementation aspects of Determinant computation.

- Mahajan, Meena and V. Vinay, “Determinant: Combinatorics, Algorithms, and Complexity”, Chicago Journal of Theoretical Computer Science, v. 1997 article 5 (1997).

- WebApp to calculate determinants and descriptively solve systems of linear equations

- Online Matrix Calculator

- Linear algebra: determinants. Compute determinants of matrices up to order 6 using Laplace expansion you choose.

- Matrices and Linear Algebra on the Earliest Uses Pages

- Determinants explained in an easy fashion in the 4th chapter as a part of a Linear Algebra course.

- Instructional Video on taking the determinant of an nxn matrix (Kahn Academy)

- Online matrix calculator (determinant, track, inverse, adjoint, transpose) Compute determinant of matrix up to order 8

Yours Sincerely. David

![\begin{align}

\sum_{\sigma \in S_n} \sgn(\sigma) \prod_{i=1}^n A_{i,\sigma_i}

&=\sgn([1,2,3]) \prod_{i=1}^n A_{i,[1,2,3]_i} + \sgn([1,3,2]) \prod_{i=1}^n A_{i,[1,3,2]_i} + \sgn([2,1,3]) \prod_{i=1}^n A_{i,[2,1,3]_i} \\ &+ \sgn([2,3,1]) \prod_{i=1}^n A_{i,[2,3,1]_i} + \sgn([3,1,2]) \prod_{i=1}^n A_{i,[3,1,2]_i} + \sgn([3,2,1]) \prod_{i=1}^n A_{i,[3,2,1]_i}

\\

&=\prod_{i=1}^n A_{i,[1,2,3]_i} - \prod_{i=1}^n A_{i,[1,3,2]_i} - \prod_{i=1}^n A_{i,[2,1,3]_i} + \prod_{i=1}^n A_{i,[2,3,1]_i} + \prod_{i=1}^n A_{i,[3,1,2]_i} - \prod_{i=1}^n A_{i,[3,2,1]_i}

\\

&=A_{1,1}A_{2,2}A_{3,3}-A_{1,1}A_{2,3}A_{3,2}-A_{1,2}A_{2,1}A_{3,3}+A_{1,2}A_{2,3}A_{3,1}+A_{1,3}A_{2,1}A_{3,2}-A_{1,3}A_{2,2}A_{3,1}

\end{align}](http://upload.wikimedia.org/math/0/6/a/06a2ac5b3b4d3cdde2b08a176dc9cc22.png)

No hay comentarios:

Publicar un comentario

Ayudenos a mejorar el blog con su opinion...